Since their inception in 2017, when face-swapping technology was first used to insert celebrity faces into explicit videos, deepfakes have evolved with remarkable speed. Early examples, such as a fabricated video of Barack Obama, highlighted the potential of this technology to deceive audiences. In recent years, however, deepfakes have moved beyond experimentation to become a sophisticated weapon for malicious activities. The technology has matured to a point where it can create hyper-realistic forgeries from minimal input data, making it accessible even to amateur users. This has broadened the range of malicious applications, from defrauding businesses to undermining public trust during elections. In 2024, deepfakes played a pivotal role in several high-profile cases of financial fraud and targeted disinformation campaigns, underscoring their rising threat.

This analysis delves into the mechanisms behind deepfake creation, their fraudulent applications, and the underground economy fueling their proliferation. It also highlights the critical need for vigilance, technological countermeasures, and public awareness to combat this rapidly evolving threat.

Key Insights:

- Deepfake technology has advanced from rudimentary audiovisual content to highly realistic fabrications, significantly increasing its potential for deception and misuse.

- The availability of open-source tools and low-cost services on underground forums and mainstream platforms has made deepfake technology accessible to malicious actors with minimal technical expertise.

- Deepfakes are used in social engineering fraud, financial scams, disinformation campaigns, and electoral interference, highlighting their integration into broader malicious operations.

- Deepfakes undermine public confidence by amplifying disinformation and delegitimizing trusted sources, thereby threatening democratic processes.

- As deepfake technology becomes more advanced and accessible, its use in coordinated attacks and disruptive operations is likely to grow, requiring organizations to enhance detection and mitigation strategies.

The Process Behind Deepfake Creation

The process of creating a deepfake can be divided into three distinct stages:

1. Data Collection: A dataset comprising images and videos of the target individual is gathered. Sources include social media platforms, publicly available videos, or other repositories. The diversity and quality of this dataset directly influence the realism of the resulting deepfake.

2. Model Training: The collected data is used to train a machine learning model to accurately replicate the facial features, expressions, and voice (if applicable) of the target.

3. Deepfake Generation: Once trained, the model overlays the target’s face onto another video or reproduces the target’s voice in an audio file, producing content that appears authentic but is entirely fabricated.

The quantity and quality of input data significantly impact the realism of a deepfake:

- Minimum Requirement: 1–2 minutes of video or audio, though this is usually insufficient for creating a convincing deepfake.

- Optimal Data: 5–10 minutes of varied video or audio content typically yields believable results.

- Ideal Dataset: Over 10 minutes of footage in diverse scenarios, or audio with variations in tone and speed, enables high-quality deepfakes.
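These ranges can also inform the risk assessments recommended at the end of this analysis: the more public footage or audio of a person exists, the easier that person is to impersonate. The following Python sketch maps minutes of available material to the tiers listed above. The tier labels, the `PublicMedia` and `exposure_tier` names, and folding the unlisted 2 to 5 minute gap into the minimum tier are illustrative assumptions, not an established scoring method.

```python
from dataclasses import dataclass

# Thresholds in minutes, taken from the ranges above. Treating 2 to 5 minutes
# as part of the "minimum" tier is an assumption made for this sketch.
MINIMUM_MINUTES = 1.0
OPTIMAL_MINUTES = 5.0
IDEAL_MINUTES = 10.0


@dataclass
class PublicMedia:
    """Hypothetical inventory of a person's publicly available recordings."""
    video_minutes: float
    audio_minutes: float


def exposure_tier(minutes: float) -> str:
    """Map minutes of available footage or audio to a qualitative tier."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    if minutes < MINIMUM_MINUTES:
        return "below minimum"
    if minutes < OPTIMAL_MINUTES:
        return "minimum (usually insufficient)"
    if minutes <= IDEAL_MINUTES:
        return "optimal (believable results likely)"
    return "ideal (high-quality deepfakes possible)"


def assess(media: PublicMedia) -> dict[str, str]:
    """Assess video and audio exposure separately, since each feeds a different forgery."""
    return {
        "video": exposure_tier(media.video_minutes),
        "audio": exposure_tier(media.audio_minutes),
    }
```

For example, `assess(PublicMedia(video_minutes=45, audio_minutes=3))` rates the video exposure as "ideal" and the audio exposure as "minimum", suggesting that a face swap is the more plausible attack against this person.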

Assessing the Risks Posed by Deepfakes

Fraudulent Applications of Deepfakes in Business and Personal Contexts:

Deepfakes pose a serious threat in multiple domains, particularly in fraud and exploitation:

- Financial Fraud: Fraudsters can use deepfakes of executives or employees to authorize fraudulent transfers, and there are already notable examples. In January 2024, an employee of a Hong Kong-based company reportedly fell victim to a deepfake-enabled fraud that resulted in a total loss of EUR 23.75 million (HKD 200 million). After receiving an email purportedly from the company’s chief financial officer (CFO) citing the need for confidential transactions, the employee was duped into joining a video conference call in which fraudsters used deepfake technology to impersonate the CFO. In February 2024, Bloomberg reported that the governor of Romania’s central bank had been targeted by a deepfake video showing the bank’s chief promoting fraudulent financial investments.

- Impersonation: Deepfakes can defeat facial recognition systems, enabling unauthorized access to sensitive accounts or infrastructure.

- Extortion: Fabricated compromising videos can be leveraged for blackmail, coercing victims into paying ransoms.

- Financial Market Manipulation: Synthetic content portraying false economic announcements or executive statements can be used to manipulate stock prices, disrupting financial markets.

- Cryptocurrency Fraud: Threat actors create videos featuring deepfaked personalities, such as journalists or officials, seemingly endorsing cryptocurrency scams.

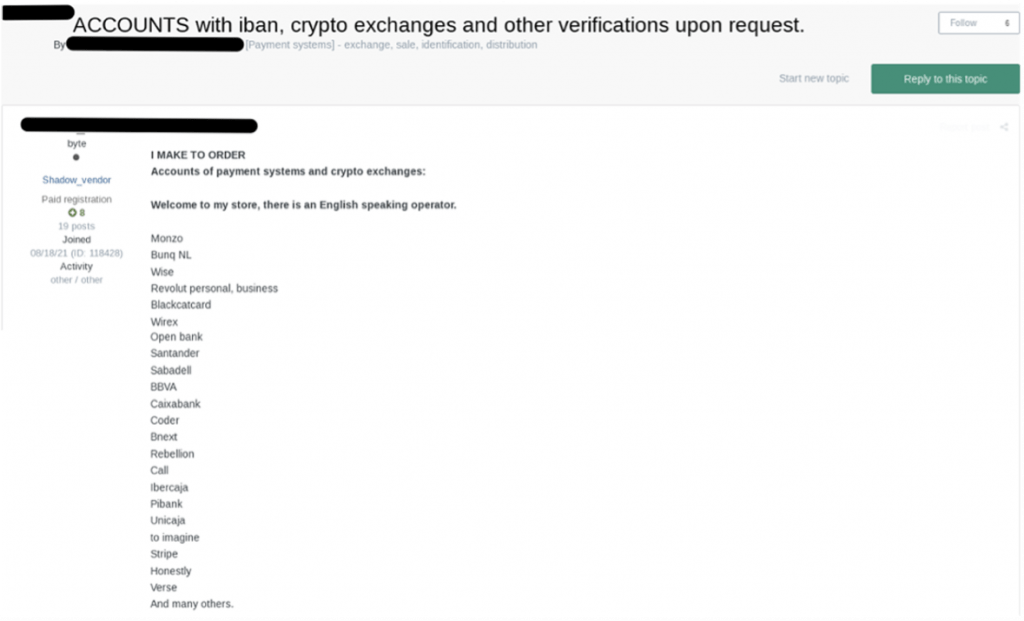

- AML and KYC Circumvention: Threat actors advertise services that use deepfake-generated IDs or selfies to bypass Anti-Money Laundering (AML) protocols, unlock frozen accounts, and meet Know Your Customer (KYC) verification requirements. Notably, fraudsters leverage deepfake technology to withdraw funds from banking drops, which are fraudulent bank accounts used to receive stolen funds. For example, QuoIntelligence found such services advertised on underground forums for 25 to 30% of the recovered account balance. On request, threat actors also provide accounts with IBANs from major banks such as Santander, BBVA, and CaixaBank, as well as crypto exchange access and selfies and ID photos tailored for verification purposes.

Deepfakes as Tools for Disinformation and Media Manipulation:

Deepfakes present a significant threat to the integrity of information and trust in what we see and hear. Risks in the realm of disinformation include:

- Political Manipulation, Including Electoral Interference: Deepfake videos or audio recordings can tarnish reputations or spread false narratives, potentially influencing election outcomes and destabilizing governance.

- Defamation: Public figures may be targeted with defamatory deepfake content aimed at damaging their credibility or reputation.

- Media Landscape Delegitimization: Deepfakes can be weaponized to delegitimize trusted media outlets, amplifying distrust in factual reporting and enabling the spread of falsehoods.

Deepfake Services on the Deep and Dark Web

Overview of Deepfake Services on Underground Forums

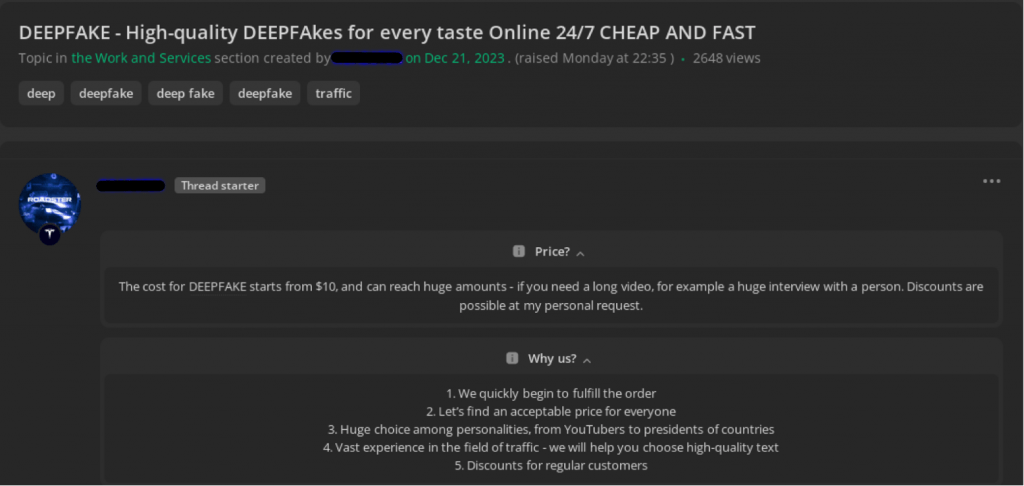

An analysis of deepfake-related services on forums such as “Zelenka” reveals a thriving marketplace offering high-quality deepfake videos at low prices. For instance, a deepfake video featuring public figures like YouTubers or world leaders is available for just $10.

Other services offered on these platforms include:

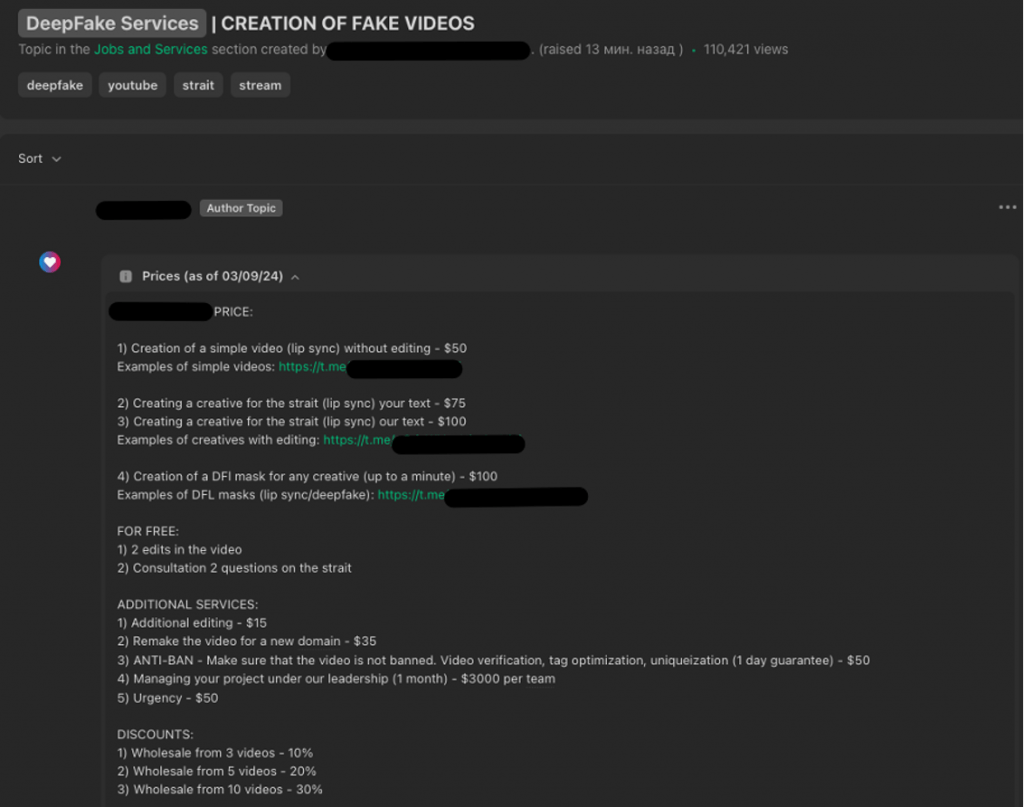

- Lip Syncing Services: Synchronizing lip movements with recorded voices, priced between $50 and $100, depending on complexity.

- Custom Deepfake Videos: A one-minute video can cost $100.

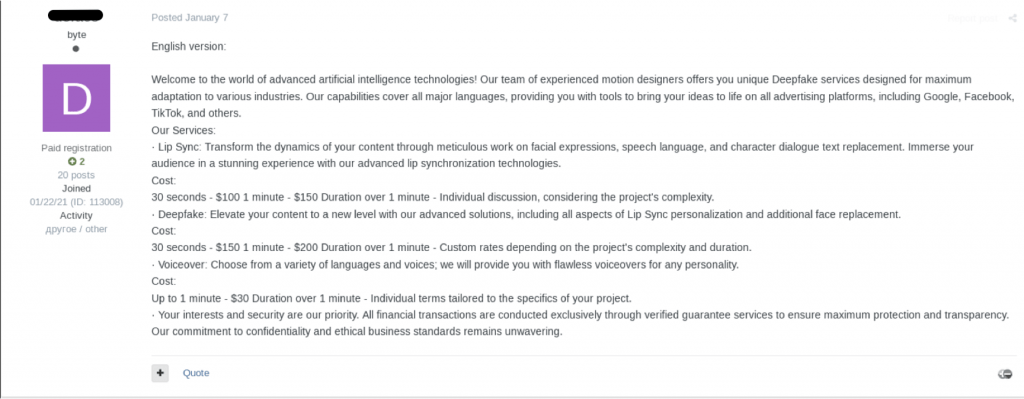

On private, more reputable forums, threat actors provide advanced AI-driven services targeting advertising platforms such as Google, Facebook, and TikTok. These offerings include:

- Lip Sync Videos: $150 per minute.

- Deepfake Videos: $200 per minute.

- Multilingual Voice Generation: Custom voice services in various languages and styles for $30 per minute.

These platforms also cater to clients seeking assistance in deploying deepfake content on advertising platforms using techniques such as AdCloaking. AdCloaking involves displaying one version of content to ad network approval systems while showing a different, often malicious, version to users. This technique helps circumvent advertising policies to promote fraudulent or restricted content.

Once confined to underground forums, deepfake services have expanded to platforms like Telegram, where they are readily accessible. Additionally, the availability of open-source tools lowers the barrier for creating deepfakes, empowering both malicious actors and amateur users.

Deepfakes in the Age of Disinformation

Throughout 2024, a pivotal year for electoral processes, numerous deepfakes surfaced in the context of elections. They were disseminated by independent individuals, political parties, and foreign operatives, fueling both misinformation and disinformation.

The phenomenon was first observed in January during Taiwan’s presidential election. We identified up to five deepfakes mimicking candidates of the pro-independence Democratic Progressive Party (DPP) and a US congressman. These efforts were likely orchestrated by Unit 61716 of China’s People’s Liberation Army, which conducts psychological warfare campaigns targeting Taiwan.

In the US, deepfakes also played a significant role during the electoral race. In January, a Democratic political consultant reportedly organized fake robocalls imitating President Joe Biden’s voice, discouraging participation in New Hampshire’s Democratic primary. In July, Elon Musk shared an AI-generated video clip on X showing Vice President Kamala Harris celebrating Biden’s withdrawal from the race. In the video, Harris referred to herself as a “diversity hire,” “deep state puppet,” and “incompetent.” The clip, shared without a clear disclaimer, amassed over 135 million views.

Elsewhere, in Georgia, the non-governmental and non-profit organization GRASS FactCheck documented and reported several deepfakes ahead of the country’s parliamentary elections.

Deepfakes used in disinformation campaigns during elections commonly exploit vulnerable narratives, such as rumors, biases, defamatory content, or controversial political issues. These narratives are amplified via coordinated efforts on social media platforms using inauthentic accounts to maximize visibility and impact.

While these campaigns have yet to demonstrate a significant effect on the outcomes of elections, they are likely to persist as disinformation operatives continue experimenting with deepfakes. Even when failing to alter election results, such tactics may undermine trust in institutions and mainstream media, potentially eroding public confidence in democratic processes.

Detecting Deepfakes

Face and voice manipulation techniques still have limitations that often leave artifacts, visual or auditory inconsistencies that can betray the content’s synthetic origin:

Artifacts in Face Manipulations:

- Visible Transitions: In face-swapping, noticeable artifacts often appear around the edges of the face. Mismatched skin tone or texture and remnants of the original face (e.g., extra eyebrows) may become visible.

- Blurry Details: Sharp features like teeth and eyes often lack definition, appearing blurred on closer inspection.

- Expression and Lighting Issues: Limited training data can hinder accurate representation of facial expressions and lighting. Rapid head turns or side profiles can result in blurriness or other visual errors.

Artifacts in Synthetic Voices:

- Metallic Sound: Synthetic audio may have an unnatural metallic quality.

- Mispronunciation: Text-to-speech (TTS) systems can mispronounce words, especially when trained in one language and used in another.

- Monotony: Poor training data can result in monotonous speech with little emphasis on words.

- Incorrect Diction: Forgery methods struggle to replicate specific vocal characteristics like accents and stress patterns accurately.

- Unnatural Audio: Input data differing significantly from training data may result in unnatural sounds, such as during lengthy text processing or handling silences.

- Processing Delays: High-quality synthetic voices often require semantic content to be pre-processed, leading to noticeable time delays.
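Some of these audio artifacts correspond to measurable signals. As a toy illustration of the monotony artifact only, the Python sketch below computes how much loudness varies across short frames of a recording, a crude proxy for the emphasis that natural speech usually carries. This is not a deepfake detector: natural speech varies widely, a low score proves nothing on its own, and practical detection tools typically rely on trained models. The function names, the 400-sample frame (25 ms at an assumed 16 kHz sampling rate), and the silence threshold are all illustrative assumptions.

```python
import math
import statistics


def frame_rms(samples: list[float], frame_size: int) -> list[float]:
    """Root-mean-square energy of consecutive, non-overlapping frames.

    A trailing partial frame is dropped.
    """
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    frames = [samples[i:i + frame_size]
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    return [math.sqrt(sum(x * x for x in frame) / frame_size) for frame in frames]


def energy_variation(samples: list[float], frame_size: int = 400,
                     silence_rms: float = 1e-3) -> float:
    """Coefficient of variation of frame energy, ignoring near-silent frames.

    Lower values mean flatter loudness over time, a crude proxy for monotony.
    """
    voiced = [r for r in frame_rms(samples, frame_size) if r > silence_rms]
    if len(voiced) < 2:
        raise ValueError("not enough voiced frames to measure variation")
    return statistics.pstdev(voiced) / statistics.fmean(voiced)
```

On a synthetic tone of constant loudness the score is close to zero, while the same tone with a slowly varying amplitude scores much higher. Real analysis would also consider pitch, timing, and spectral features, which this sketch deliberately leaves out.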

Conclusions and Recommendations

- Deepfakes have progressed from visibly synthetic media to highly convincing and realistic fabrications in a relatively short period. Their increasing sophistication poses significant threats to organizations, individuals, and the political realm.

- The widespread availability of open-source tools and affordable deepfake services on underground forums and mainstream platforms has democratized the creation of deepfakes. This accessibility empowers malicious actors with limited technical expertise to engage in disruptive activities.

- The use of deepfakes in social engineering fraud, defamation, disinformation campaigns, and election interference underscores their growing integration into a wider range of malicious operations.

- Deepfakes contribute to a growing erosion of trust in media and institutions by amplifying misinformation and disinformation.

- As the technology becomes more advanced and accessible, the likelihood of deepfakes being used in social engineering attacks against organizations is increasing.

To reduce exposure to deepfake threats, we recommend that organizations:

- Establish secure communication channels for sensitive discussions and decisions to prevent exploitation through deepfake impersonation. Restrict access to sensitive systems and data to only those who require it, minimizing potential exploitation.

- Develop a comprehensive incident response plan for deepfake-related threats, including protocols for verifying the authenticity of content, which can leverage AI-powered deepfake detection tools.

- Educate and train personnel to identify and report deepfake attacks. Update training on a regular basis to keep pace with advancements in deepfake technology.

- Identify risk users within organizations based on their visibility, level of access to sensitive information, and the criticality of their position. Risk users include individuals with a high public profile or media presence, as well as employees who handle sensitive information or are critical to operations or decision-making, such as C-level executives, IT administrators, board members, and financial officers.

- Conduct risk assessments periodically to evaluate the organization’s vulnerability to deepfake threats, and update mitigation strategies accordingly. These assessments should include an evaluation of risk users’ online presence, including social media activity and exposure in professional or personal contexts.

- Simulate potential attack scenarios to test and improve organizational defenses.
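The Hong Kong case described earlier succeeded because a video call was accepted as proof of identity. As a minimal sketch of the verification protocols recommended above, a payment workflow could require out-of-band confirmation whenever a high-value or unusual request arrives through a channel that deepfakes can impersonate. The channel names, the amount threshold, the `PaymentRequest` fields, and the rules themselves are hypothetical assumptions for illustration, not a standard or a specific product’s logic.

```python
from dataclasses import dataclass

# Hypothetical policy values; each organization would set its own.
AMOUNT_THRESHOLD = 10_000
REMOTE_CHANNELS = {"email", "video_call", "phone", "chat"}


@dataclass
class PaymentRequest:
    requester: str            # claimed identity, e.g. "CFO"
    channel: str              # channel the request arrived through
    amount: float
    new_beneficiary: bool
    marked_confidential: bool


def verification_steps(req: PaymentRequest) -> list[str]:
    """Return the checks required before the transfer may proceed."""
    steps = []
    if req.channel in REMOTE_CHANNELS and (
        req.amount >= AMOUNT_THRESHOLD or req.new_beneficiary
    ):
        # A face or voice on a remote channel is not treated as proof of identity;
        # the callback number comes from an internal directory, never from the request.
        steps.append(f"call back {req.requester} via a number from the internal directory")
    if req.marked_confidential:
        # Requests for secrecy bypass normal oversight and warrant escalation.
        steps.append("escalate to security team: request demands confidentiality")
    if req.amount >= AMOUNT_THRESHOLD:
        steps.append("obtain sign-off from a second approver")
    return steps
```

Applied to a scenario resembling the Hong Kong case, `verification_steps(PaymentRequest("CFO", "video_call", 25_000_000, True, True))` returns all three checks, while a small in-person request returns none. Keeping the rules independent of how convincing the caller looks is the point of the design: it holds even if the deepfake itself is flawless.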

Written by: